After working with these Bluetooth modules for a while, I have become pretty familiar with them. There are several different models out there, but most of them are built around the BC417 core for Bluetooth.

The idea was that we could run at the highest baud rate the AT commands allow, which is 1382400 baud. With that we could do about 3.5 frames a second of raw 251×100 frames at 2 bpp (bytes per pixel), of course in a perfect world with no overhead. Then I would move on to a camera with built-in JPEG compression and get a 1/16 compression ratio, moving me to about 56 fps, at which point I could trade some of that speed for a bigger image and end up closer to 20 fps.
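The arithmetic behind those numbers can be sketched in a few lines of Python. This is only a back-of-the-envelope helper, assuming "2 bpp" means two bytes per pixel and that every bit on the link is image data; the function names `frame_bits` and `ideal_fps` are made up for illustration.

```python
def frame_bits(width=251, height=100, bytes_per_pixel=2):
    """Size of one raw frame in bits (assumes 2 bytes per pixel)."""
    return width * height * bytes_per_pixel * 8


def ideal_fps(link_bps, compression_ratio=1.0, bits_per_frame=None):
    """Frames per second if the whole link carried nothing but image bits.

    compression_ratio=16 models the hoped-for 1/16 JPEG ratio.
    """
    if bits_per_frame is None:
        bits_per_frame = frame_bits()
    return link_bps * compression_ratio / bits_per_frame


if __name__ == "__main__":
    raw = ideal_fps(1_382_400)
    jpeg = ideal_fps(1_382_400, compression_ratio=16)
    print(f"raw: {raw:.2f} fps, 16:1 JPEG: {jpeg:.1f} fps")
```

Plugging in any other link rate (in bits per second) gives the matching best-case frame rate for the same image size.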

Wrong! After setting the Bluetooth module to a higher baud rate (921600), I maxed out at 160 kbps rx. This did not make any sense to me, so I looked at the module's specs and saw:

Rate: Asynchronous: 2.1Mbps(Max) / 160 kbps.

There was another spec for synchronous, but the USART is async. I really don't know why these are written this way. Maybe it's 2.1 Mbps upload and 160 kbps down, or maybe it's 2.1 Mbps on the USART side and 160 kbps max on the Bluetooth side, or maybe it simply means that the max is 2.1 Mbps and we need at least 160 kbps for the other side.

So I dove deeper into the BC417 datasheet. It shows the UART can communicate as fast as 2764800 baud, but if you look at the RFCOMM section (9.2.1) you see it has a 350 kbps max data rate. I have no idea how any of these chips could have data coming in at a huge rate and going out at a much slower one. Maybe I would see a buffer overflow if I pushed too much. Actually, I think I did, at about 700+ bytes.
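To get a feel for how quickly a rate mismatch like that would bite, here is a rough sketch. It assumes a simple FIFO that fills at the UART rate and drains at the Bluetooth rate; the 700-byte size is just my observed number used as a hypothetical buffer size, not something from the datasheet, and `seconds_until_overflow` is an illustrative name.

```python
def seconds_until_overflow(buffer_bytes, in_bps, out_bps):
    """Time for a FIFO to fill when data arrives faster than it drains.

    Rates are payload bits per second. Returns None when the drain
    keeps up with the input and the buffer never fills.
    """
    surplus_bps = in_bps - out_bps
    if surplus_bps <= 0:
        return None
    return buffer_bytes * 8 / surplus_bps


if __name__ == "__main__":
    # 921600 baud with 8N1 framing carries 8 payload bits per 10 line bits.
    uart_payload_bps = 921_600 * 8 / 10
    t = seconds_until_overflow(700, uart_payload_bps, 160_000)
    print(f"a 700-byte buffer would fill in about {t * 1000:.1f} ms")
```

Under these assumptions the buffer fills in roughly ten milliseconds. If hardware flow control (RTS/CTS) is wired up between the host and the module, the sender should be stalled instead of overflowing anything.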

Bottom line: the max is 350 kbps for what we are using, and all I can seem to get out of it is 160 kbps. That may be a limitation of how they implemented the host MCU on the Bluetooth module, but the datasheet for the BT module is confusing (to me). So even if we could get 350 kbps, that would be only 0.87 fps raw (a frame every 1.14 sec), or possibly ~13 fps with JPEG compression.

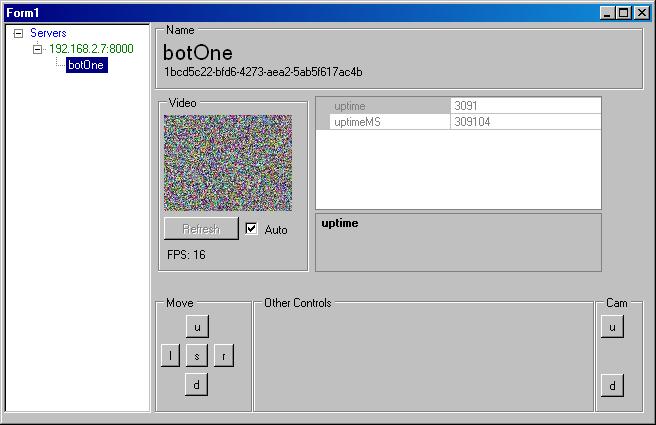

What I have working now is 160 kbps at a decent range. That gives me 0.39 fps, or a frame every 2.56 sec, which would be ~6 fps with compression. If I run my API on the computer and use a serial adapter instead, I can get 921600 baud (the max of the Prolific USB converter) and see 2 fps. 2 fps is much better than a frame every 2.56 sec, but it still sucks!
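The wired number lines up with plain UART framing overhead. The sketch below assumes 8N1 framing (1 start bit, 8 data bits, 1 stop bit, no parity), which I have not confirmed for the adapter, and the same 251×100 frame at 2 bytes per pixel; the helper names are hypothetical.

```python
FRAME_BYTES = 251 * 100 * 2  # one raw frame, assuming 2 bytes per pixel


def uart_payload_bytes_per_sec(baud, data_bits=8, start_bits=1,
                               stop_bits=1, parity_bits=0):
    """Payload bytes per second on an async serial line.

    Each character on the wire costs start + data + parity + stop bits,
    so 8N1 spends 10 line bits for every 8-bit byte.
    """
    bits_per_char = start_bits + data_bits + parity_bits + stop_bits
    return baud / bits_per_char


def uart_fps(baud):
    """Best-case raw frame rate over the serial link at the given baud."""
    return uart_payload_bytes_per_sec(baud) / FRAME_BYTES


if __name__ == "__main__":
    print(f"921600 baud, 8N1: {uart_fps(921_600):.2f} fps")
```

With those assumptions 921600 baud works out to a bit under 2 fps, which is in line with what I see over the serial adapter.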

Options?

I could use the Nordic nRF2401 chips and implement a dongle, as I had in mind before. I know Android devices are getting better at using USB converters and such. This would give me decent performance.

Raspberry Pi with WiFi.