Migration to F4

Since I’m a lazy sort, I took the easy way out and bought a unit with enough memory to actually hold an image of at least QCIF resolution. I found an easy-to-use board on eBay that came with all kinds of modules I could just plug into the F4 Discovery and get going, even a camera. I think this was a good choice, since my main downfall is lack of time for development.

All of the code has been migrated over and works as it did on the F3. I only have one ADC though. I have also made a few improvements to the code and the protocol. So now I have more speed and 192 KB of memory to work with.

On another note, I do plan on getting this working and then possibly migrating a version back to the STM32 F3. I could still use the F3 with DMA based off GPIO to control the camera. Working with the F4, I realize that yes, it’s great and will get me where I want faster, but it also costs 3 times as much, and the F3 could do everything I need given the right amount of memory.

Easy to use Java API

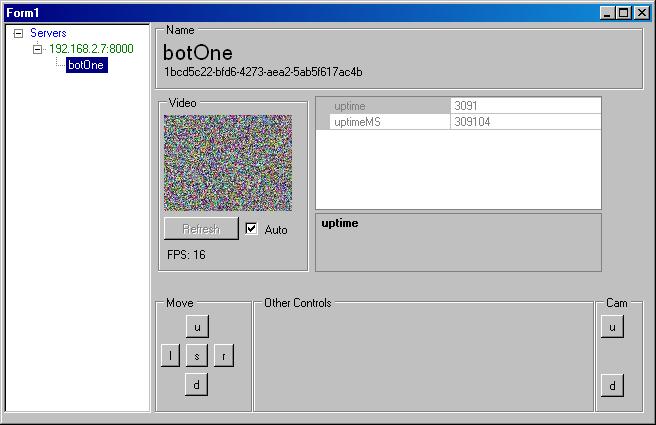

Somewhere along the line I decided to write the code with full comments and to pull out everything that was OS-specific. I split the objects up so that only what the user needs is accessible. At one point I was convinced the Bluetooth module was not working, so I switched away from the Android platform, and in doing so confirmed that the API also works well in a Windows environment.

Basically this is how the API works.

- Create a new “zBot” passing it an input and output stream. This makes it transmission medium agnostic.

zBot = new ZBot( inS, outS );

- Then you simply wait for the bot’s state to be 2 or above, meaning it’s ready. I will make this an enum soon.

- Then you can simply pull the values listed in the “EzReadVars” enum. For example, this prints out all available variables, like ADC, GPIO, time, etc.

for ( EzReadVars rv : EzReadVars.values())

{

this.logI("Var: " + rv + " Value: " + zBot.getValue(rv));

}

- For the image you can either read out the bytes of the image or you can subscribe and get a callback when a new image gets pulled from the bot.

while(zBot.getImageState() != EzImageState.Ready){}

int width = zBot.getValue(EzReadVars.VideoWidth);

int height = zBot.getValue(EzReadVars.VideoHeight);

//int bpp = zBot.getValue(EzReadVars.v);

byte[] pic = zBot.getRawFrame();
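The raw frame still has to be unpacked before it can be shown on screen. Here is a minimal sketch of that step, assuming the bytes are RGB565 with two bytes per pixel and the low byte first. The byte order is a guess on my part (swap `lo` and `hi` if the colors come out wrong), and the `Rgb565` class is a hypothetical helper, not part of the zBot API.

```java
/** Hypothetical helper, not part of the zBot API. */
final class Rgb565 {
    /**
     * Converts RGB565 pixels (2 bytes each, low byte first, which is an
     * assumption) into packed 0xAARRGGBB ints, one int per pixel.
     */
    static int[] toArgb(byte[] raw, int width, int height) {
        if (raw.length < width * height * 2) {
            throw new IllegalArgumentException(
                "frame too short for " + width + "x" + height);
        }
        int[] argb = new int[width * height];
        for (int i = 0; i < argb.length; i++) {
            int lo = raw[2 * i] & 0xFF;       // assumed low byte
            int hi = raw[2 * i + 1] & 0xFF;   // assumed high byte
            int pixel = (hi << 8) | lo;
            int r5 = (pixel >> 11) & 0x1F;    // top 5 bits: red
            int g6 = (pixel >> 5) & 0x3F;     // middle 6 bits: green
            int b5 = pixel & 0x1F;            // bottom 5 bits: blue
            // Expand to 8 bits per channel by replicating the high bits.
            int r = (r5 << 3) | (r5 >> 2);
            int g = (g6 << 2) | (g6 >> 4);
            int b = (b5 << 3) | (b5 >> 2);
            argb[i] = 0xFF000000 | (r << 16) | (g << 8) | b;
        }
        return argb;
    }
}
```

With the variables from the snippet above, this would be called as `int[] argb = Rgb565.toArgb(pic, width, height);`. On the desktop the result can go straight into `BufferedImage.setRGB(0, 0, width, height, argb, 0, width)`.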

This is cross-platform and pretty easy to use, but far from done. I really want to tie everything down into enums and document everything. Then I’ll make an example each for Android, Windows and Linux and release those. This will give people everything they need to get started with the API.
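As a rough idea of what tying the bot state into an enum could look like, here is a sketch. Only "2 or above means ready" comes from the current code. The state names, the meaning of the other codes, and the `BotState` and `BotWait` classes are all made up for illustration. The wait helper polls with a short sleep and a timeout instead of spinning in an empty loop.

```java
import java.util.function.IntSupplier;

/** Hypothetical state enum; only "2 or above is ready" is from the real API. */
enum BotState {
    DISCONNECTED(0),  // invented name
    HANDSHAKING(1),   // invented name
    READY(2),         // the one known threshold
    STREAMING(3);     // invented name

    private final int code;

    BotState(int code) { this.code = code; }

    int code() { return code; }

    boolean isReady() { return code >= READY.code; }

    static BotState fromCode(int code) {
        for (BotState s : values()) {
            if (s.code == code) return s;
        }
        throw new IllegalArgumentException("unknown state code " + code);
    }
}

/** Hypothetical helper that waits for the ready state without busy-spinning. */
final class BotWait {
    /** Polls the raw state code until it is ready or the timeout expires. */
    static boolean awaitReady(IntSupplier stateCode, long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        while (stateCode.getAsInt() < BotState.READY.code()) {
            if (System.currentTimeMillis() >= deadline) return false;
            try {
                Thread.sleep(10);  // give the reader thread time to run
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return true;
    }
}
```

The `IntSupplier` stands in for whatever getter ends up exposing the bot state, so the helper does not depend on a particular method name.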

Video in progress

I’m so tired of saying this. The video is by far the hardest part for me. I finally have good data coming out at 251×100 resolution in RGB565. I don’t know why the camera is set to this resolution, but painstakingly slow testing has shown that it is. It is something I can work with, at least for now. The height might actually be more than 100, but I stopped the DCMI transfer at 100 lines.
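To put numbers on that, here is a quick back-of-the-envelope calculation, assuming 2 bytes per pixel for RGB565 and the 192 KB figure quoted earlier in the post. `FrameBudget` is just a throwaway class for the arithmetic. Depending on the chip, not all of that memory is necessarily reachable by DMA, so treat the total as an upper bound.

```java
/** Throwaway arithmetic helper; the class name is hypothetical. */
final class FrameBudget {
    static final int BYTES_PER_PIXEL = 2;      // RGB565 is 16 bits per pixel
    static final int SRAM_BYTES = 192 * 1024;  // figure quoted in the post

    /** Size in bytes of one uncompressed RGB565 frame. */
    static int frameBytes(int width, int height) {
        return width * height * BYTES_PER_PIXEL;
    }

    public static void main(String[] args) {
        int current = frameBytes(251, 100);  // what the camera sends today
        int qcif = frameBytes(176, 144);     // QCIF, the original target
        System.out.println("251x100 frame: " + current + " bytes");
        System.out.println("QCIF frame:    " + qcif + " bytes");
        System.out.println("two 251x100 frames: " + (2 * current)
                + " of " + SRAM_BYTES + " bytes");
    }
}
```

One 251×100 frame works out to 50,200 bytes, almost the same as a QCIF frame at 50,688 bytes, and two of them (100,400 bytes) still fit in 196,608 bytes.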

Looking beyond that, having DMA spit my image out over the USART while DCMI is writing an image into the same memory does not seem to work. I think they conflict when they hit the bus. So I’m going to implement double buffering. To do this I have to add some code to the zBot hardware side and some to the Java side. Basically, the bot will take a picture into one side, and when we send it an update it will fill the picture into the “other side”. This way we can pull image A, hit “update”, pull image B, hit update, and so on. The unit is always taking a snapshot and writing it into the picture buffer we just received. This should not be too hard.
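The swap logic can be sketched in a few lines. This is a host-side Java simulation of the idea, not the firmware, and the `PingPongFrames` class and its method names are hypothetical. The camera always writes into the side that is not being read, and "update" flips which side that is.

```java
/** Hypothetical simulation of the two-sided picture buffer. */
final class PingPongFrames {
    private final byte[][] buffers;
    private int readIndex = 0;  // side the host currently pulls from

    PingPongFrames(int frameBytes) {
        buffers = new byte[2][frameBytes];
    }

    /** Camera side: store a snapshot in the side that is NOT being read. */
    void capture(byte[] frame) {
        byte[] target = buffers[1 - readIndex];
        int n = Math.min(frame.length, target.length);
        System.arraycopy(frame, 0, target, 0, n);
    }

    /** Host "update" command: swap the read side and the write side. */
    void update() {
        readIndex = 1 - readIndex;
    }

    /** Host side: copy out the frame on the read side. */
    byte[] pull() {
        return buffers[readIndex].clone();
    }
}
```

After an `update()`, new captures land in the buffer that was just pulled, while the host reads the other one, so the writer and the reader never touch the same side at the same time.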

Here is a picture of color bars at 251×100 resolution that I pulled. At least now I can crank up the speed and start to get my actual fps.