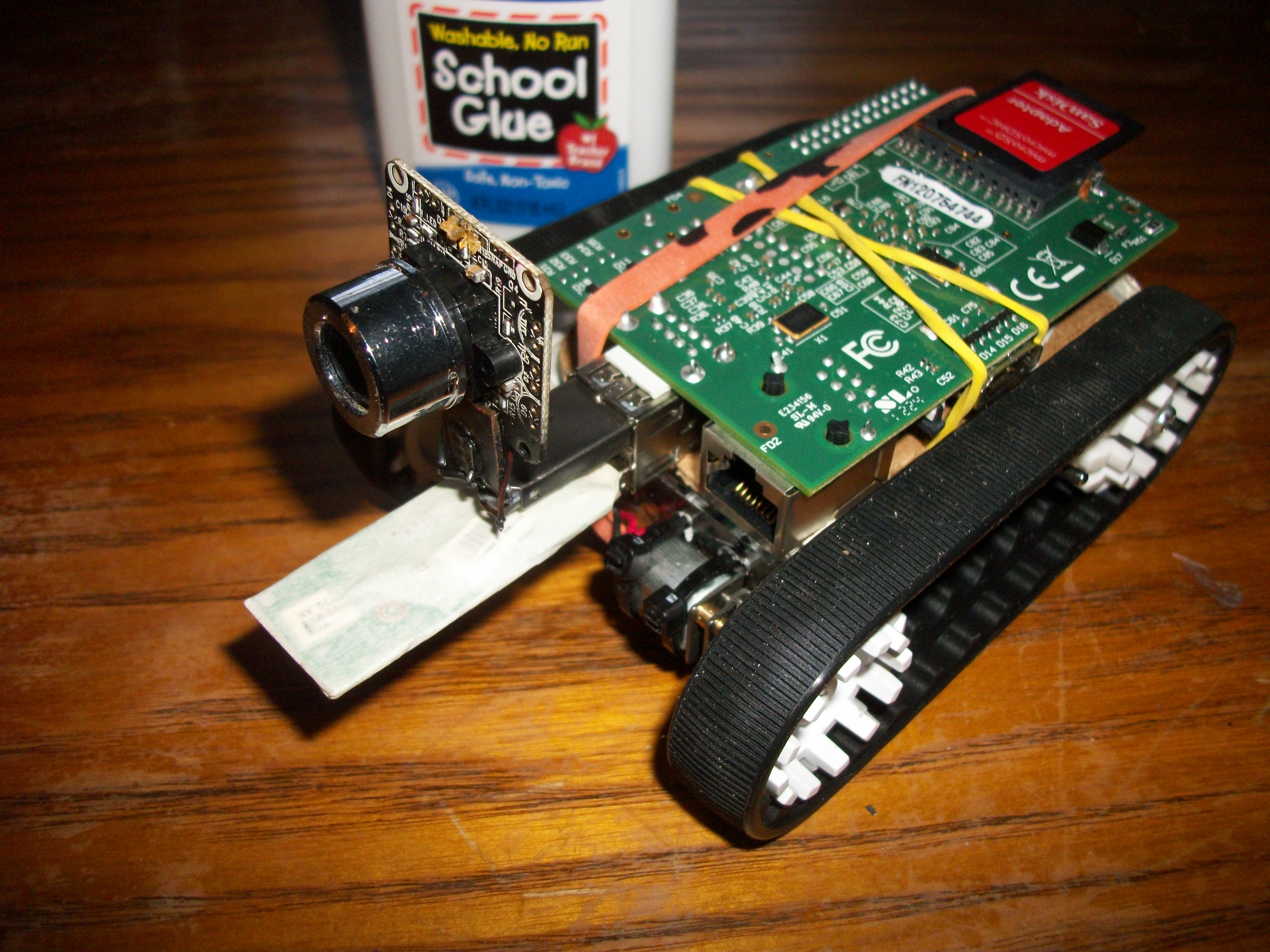

Using my Model B with a USB video camera was fun, but with the new camera module and the cheaper Model A out, I wanted to give those a go.

Parts:

- rPi Model A (no Ethernet, one USB port), $25

- Camera module for the rPi, $25

- RTL8188CUS WiFi dongle (<$5)

- Something to drive around

- Circuitry to drive the motors

- Circuitry to tell you when the batteries are low: http://zonerobotics.com/wordpress/?p=257

Connect Over Serial:

To do this I used a USB<->UART converter plugged directly into the rPi, wired as the pinout here describes: http://elinux.org/RPi_Low-level_peripherals. I connected with a PuTTY session at 115200 baud, 8N1.
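For reference, “8N1” means 8 data bits, no parity and 1 stop bit; with the start bit added, every byte costs 10 bits on the wire. A tiny sketch of what that means for throughput (the helper names are just for illustration, not from any serial library):

```python
def frame_bits(data_bits: int = 8, parity: bool = False, stop_bits: int = 1) -> int:
    """Bits on the wire per character: 1 start bit + data bits + optional parity + stop bits."""
    return 1 + data_bits + (1 if parity else 0) + stop_bits


def bytes_per_second(baud: int, data_bits: int = 8, parity: bool = False, stop_bits: int = 1) -> float:
    """Upper bound on payload throughput of an asynchronous UART link."""
    return baud / frame_bits(data_bits, parity, stop_bits)


if __name__ == "__main__":
    # 115200 baud with 8N1 framing
    print(f"{bytes_per_second(115200):.0f} bytes/s")
```

At 115200 baud that works out to 11,520 bytes/s, which is plenty for a text console.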

Connect wifi:

http://learn.adafruit.com/adafruits-raspberry-pi-lesson-3-network-setup/setting-up-wifi-with-occidentalis

Update the rPi and set up the camera files:

http://www.tweaktown.com/guides/5617/raspberry-pi-camera-module-review-and-tutorial-guide/index2.html

Free up some space (I’m on a 2 GB SD card):

This will remove the X11/GUI interface. If you still need that, you might want a bigger SD card; I don’t need it for now.
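Before running the command below, it helps to know what the backtick part hands to apt-get: it lists every package dpkg knows about, drops lines marked “deinstall”, keeps lines containing “x11”, and strips the word “install” so only the package names are left. Here is the same text filtering as a small Python sketch (the `x11_packages` function and the sample lines are made up for illustration; it doesn’t remove anything):

```python
def x11_packages(selections: str) -> list[str]:
    """Mimic the shell filter applied to `dpkg --get-selections` output:
    grep -v "deinstall" | grep x11 | sed s/install//
    """
    names: list[str] = []
    for line in selections.splitlines():
        if "deinstall" in line:  # grep -v "deinstall"
            continue
        if "x11" not in line:  # grep x11 (case-sensitive substring match)
            continue
        line = line.replace("install", "", 1)  # sed s/install// (first match per line)
        names.extend(line.split())  # backticks word-split the output into arguments
    return names


if __name__ == "__main__":
    # Hypothetical sample of `dpkg --get-selections` output (name<TAB>status)
    sample = (
        "libx11-6\t\t\t\t\tinstall\n"
        "x11-common\t\t\t\t\tinstall\n"
        "vim\t\t\t\t\t\tinstall\n"
        "libx11-data\t\t\t\t\tdeinstall\n"
    )
    print(x11_packages(sample))
```

Running the backtick part on its own first is a cheap way to see exactly what will be removed.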

sudo apt-get remove `sudo dpkg --get-selections | grep -v "deinstall" | grep x11 | sed s/install//`

Streaming from the Camera Module:

Looking around, I see no /dev/video0. After searching the vast internet, I don’t think there is a video4linux driver for it. How lame; I thought this would be plug and play. I’ve written my own CMOS camera drivers and sent the images over Bluetooth with the STM32, and it’s not trivial. I was hoping for a simpler solution, given that this camera was expensive and that the Pi is a powerhouse compared to my other units. See this article for more details about why it does not work and some ways that it might:

http://raspberrypi.stackexchange.com/questions/7446/how-can-i-stream-h264-video-from-raspberry-camera-module-via-apache-nginx-for-re
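Checking for a video4linux device node is easy to script. A minimal Python sketch (the `video_devices` helper is hypothetical, not part of any Raspberry Pi tool) that lists whatever /dev/video* nodes exist; with the camera module as described above, it comes back empty:

```python
import glob
import os


def video_devices(dev_dir: str = "/dev") -> list[str]:
    """Return the V4L device nodes (video0, video1, ...) under dev_dir, sorted by name."""
    return sorted(glob.glob(os.path.join(dev_dir, "video*")))


if __name__ == "__main__":
    found = video_devices()
    if found:
        print("V4L devices:", ", ".join(found))
    else:
        print("No /dev/video* nodes: no video4linux driver has registered a device")
```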

This page is more optimistic and describes three different ways to stream:

http://www.mybigideas.co.uk/RPi/RPiCamera/

First I had to install VLC; ouch, 132 MB gone. Then I tried:

raspivid -o - -t 9999999 |cvlc -vvv stream:///dev/stdin --sout '#rtp{sdp=rtsp://:8554/}' :demux=h264

The latency was really bad; it seemed like minutes until I actually got video. It kept restarting over and over, hitting this error:

[0xd01d28] main input error: ES_OUT_SET_(GROUP_)PCR is called too late (pts_delay increased to 1953 ms)

[0xd01d28] main input error: ES_OUT_RESET_PCR called

So I found a link that talks about “low latency” GStreamer, and since I had been using GStreamer on my Linux box the other day with much interest, I was drawn more to this.

Before installing GStreamer I deleted more packages with apt-get remove, as in the article above. That left me with about 300 MB; I didn’t uninstall VLC since it was the only thing I had working so far. GStreamer was 154 MB (these packages are huge), so there went another 20 minutes of waiting.

I fought with this for a while but could never get a client to work with GStreamer. I tried VLC again with lower/smaller settings and it was just as crappy.
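apt-get already prints how much disk space a package will use before it asks to continue, but on a 2 GB card it can be handy to check from a script first. A minimal sketch using Python’s `shutil.disk_usage` (the `has_room` helper and its 50 MB safety margin are my own assumptions, not anything apt uses):

```python
import shutil


def free_mb(path: str = "/") -> float:
    """Free space on the filesystem containing `path`, in MB (1 MB = 10**6 bytes)."""
    return shutil.disk_usage(path).free / 1_000_000


def has_room(needed_mb: float, path: str = "/", margin_mb: float = 50.0) -> bool:
    """True if installing needed_mb would still leave at least margin_mb free.

    margin_mb is an arbitrary safety margin, not a value apt uses.
    """
    return free_mb(path) - needed_mb >= margin_mb


if __name__ == "__main__":
    # GStreamer took about 154 MB here
    print(f"{free_mb():.0f} MB free; room for 154 MB: {has_room(154)}")
```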

VLC Sorta Success:

After working with these tools for a long time, I got this to work pretty well. It’s smooth and fast, with no freezing:

raspivid -t 999999 -h 600 -w 800 -fps 30 -hf -b 2000000 -o - |cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

I tried lowering the framerate, size and bitrate, and each time it got worse and worse. With a lower bitrate or fps it would freeze and disconnect, which does not make any sense to me: with less data it should work better, right? My guess is that it has to do more processing if you scale the video in any way, which hogs the CPU and screws everything up. I read tons of articles; the last one was this:

http://www.raspberrypi.org/phpBB3/viewtopic.php?f=43&t=43969
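To put numbers on the settings that did work: at -b 2000000 and -fps 30, the encoder has on average about 66,700 bits (roughly 8 KB) for each 800x600 frame, and the whole stream is about 250 kB/s. A small back-of-envelope sketch (the function names are made up; these are averages only, since H.264 spends many more bits on keyframes than on the frames between them):

```python
def bits_per_frame(bitrate_bps: int, fps: float) -> float:
    """Average encoded bits available for each frame."""
    return bitrate_bps / fps


def bits_per_pixel(bitrate_bps: int, fps: float, width: int, height: int) -> float:
    """Average encoded bits per pixel per frame (a rough compression indicator)."""
    return bits_per_frame(bitrate_bps, fps) / (width * height)


if __name__ == "__main__":
    # Settings from the working raspivid command: -w 800 -h 600 -fps 30 -b 2000000
    bpf = bits_per_frame(2_000_000, 30)
    print(f"{bpf:.0f} bits (~{bpf / 8 / 1024:.1f} KiB) per frame")
    print(f"{bits_per_pixel(2_000_000, 30, 800, 600):.3f} bits per pixel")
    print(f"{2_000_000 / 8 / 1000:.0f} kB/s on the wire, before container overhead")
```

Plugging in the lower settings I tried shows how much less data each of them was pushing.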

Making a Webpage:

I installed Apache, then put this in a video.html page (replace PI_IP_ADDRESS with the Pi’s address):

<!DOCTYPE html> <html><body> <OBJECT classid="clsid:9BE31822-FDAD-461B-AD51-BE1D1C159921" codebase="http://downloads.videolan.org/pub/videolan/vlc/latest/win32/axvlc.cab" width="800" height="600" id="vlc" events="True"> <param name="Src" value="http://PI_IP_ADDRESS:8080/" /> <param name="ShowDisplay" value="True" /> <param name="AutoLoop" value="False" /> <param name="AutoPlay" value="True" /> <embed id="vlcEmb" type="application/x-google-vlc-plugin" version="VideoLAN.VLCPlugin.2" autoplay="yes" loop="no" width="640" height="480" target="http://PI_IP_ADDRESS:8080/" ></embed> </OBJECT> </body></html>

Latency !!:

I’m getting about 4 seconds of latency, which ruins everything. I had much better results with the USB cam. However, these people seem to think it can work much faster, with “no latency”, so I’m going to try their netcat solution later:

http://www.raspberrypi.org/phpBB3/viewtopic.php?f=43&t=39996&start=50#p341674

Yes, at least a 3-second delay, and changing the bitrate makes no difference to it. Looking at the nc solution, this is the most complete example I can find (the first line runs on the Pi, the second on Windows):

raspivid -t 999999 -o - | nc 192.168.1.76 5001

c:\apps\nc\nc.exe -L -p 5001 | c:\apps\mplayer\mplayer.exe -fps 31 -cache 1024 -
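If you don’t have netcat on the receiving machine, the receiving half is simple enough to sketch in Python. This is my own stand-in that I’m assuming behaves like `nc -l -p 5001` for this purpose, not part of the example above: it listens on a TCP port, accepts one sender, and copies the bytes to stdout so they can be piped into mplayer. Unlike `nc -L`, which keeps listening after a disconnect, it handles one connection and exits; the file name `nc_recv.py` is hypothetical.

```python
import io
import socket
import sys

CHUNK_SIZE = 4096  # maximum bytes read per recv() call


def listen(port: int, host: str = "0.0.0.0") -> socket.socket:
    """Open a TCP listening socket, roughly like `nc -l -p PORT`."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    srv.listen(1)
    return srv


def relay_one(srv: socket.socket, sink: io.BufferedIOBase) -> int:
    """Accept one sender and copy its bytes to sink until it disconnects.

    Returns the number of bytes relayed.
    """
    conn, _addr = srv.accept()
    total = 0
    with conn:
        while True:
            chunk = conn.recv(CHUNK_SIZE)
            if not chunk:  # sender closed the connection
                break
            sink.write(chunk)
            sink.flush()  # hand data to the player right away instead of buffering
            total += len(chunk)
    return total


if __name__ == "__main__":
    # Pi side (unchanged): raspivid -t 999999 -o - | nc RECEIVER_IP 5001
    # Receiver side:       python nc_recv.py | mplayer -fps 31 -cache 1024 -
    with listen(5001) as server:
        relay_one(server, sys.stdout.buffer)
```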

My exact setup was:

On the Pi:

raspivid -t 999999 -vf -hf -b 2000000 -o - | nc 192.168.2.17 5001

Then on the Windows machine (where I had to download mplayer and all the codecs) I ran the receiving command from the example above:

c:\apps\nc\nc.exe -L -p 5001 | c:\apps\mplayer\mplayer.exe -fps 31 -cache 1024 -

This started off just like the rest, but after about a minute of running, the latency was gone; there is maybe 200-300 ms of latency left. I could snap my fingers and see it way before I could say “one Mississippi” (very scientific).
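One plausible place for seconds of delay to hide is buffering: a buffer that fills before playback holds a fixed amount of data, so the time it represents depends on the bitrate. For example, mplayer’s `-cache 1024` asks for a 1024 KB cache, and at 2 Mbit/s a full buffer of that size is about 4.2 seconds of video. This is arithmetic only, not a measurement of what VLC or mplayer actually did, and the sketch assumes 1 KB = 1024 bytes:

```python
def buffer_seconds(buffer_kb: float, bitrate_bps: float) -> float:
    """Seconds of stream held by a full buffer of buffer_kb kilobytes (1 KB = 1024 bytes)."""
    return buffer_kb * 1024 * 8 / bitrate_bps


if __name__ == "__main__":
    # -cache 1024 from the mplayer command, -b 2000000 from the raspivid command
    for bitrate in (2_000_000, 1_000_000, 500_000):
        print(f"{bitrate / 1e6:.1f} Mbit/s: {buffer_seconds(1024, bitrate):.1f} s")
```

The same fixed-size buffer represents twice as much time at half the bitrate.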

Conclusion:

I don’t get it. I read all these claims online like “it does not have enough power”, etc. My STM32 could pump data out via DMA as fast as we could process it.

- Video is shaky: the camera is stable, but it looks like whatever you are looking at is in an earthquake

- Motion is frequently blurred and pixelated, smearing across the screen

- The camera is much more expensive than a USB solution

- All the normal ways of streaming have 3+ seconds of latency. Some other methods (netcat) have less latency, but they are not reliable and seem to crash all of a sudden. Plus, they don’t stream into a webpage or anything simple.

- Reducing bitrate or resolution just seems to make things worse

If I have to choose between this and a simple USB camera, I was having a lot more luck with the USB camera. I can see the Raspberry Pi camera being used as a webcam or in something that takes pictures, but as eyes for a teleop bot, the USB camera may not be as high quality, yet it also did not smear, shake, lag or cost as much!